Instructors (including CCX coaches) want to know: what answers did my students give to the questions in my course? This applies to multiple choice questions, free text questions, essay questions, polls, and more. Unfortunately, there is currently no single, convenient tool for instructors to view student answers.

The status quo is:

- Insights shows the distribution of student answers for certain problem types (essentially only multiple-choice-style questions), but it doesn’t show which students chose which answers. It is optimized for huge courses, not small courses.

- The Instructor dashboard provides a method for downloading a CSV containing student data for any given problem, but the data is difficult to interpret (idiosyncratic JSON format) and is only available for one problem at a time.

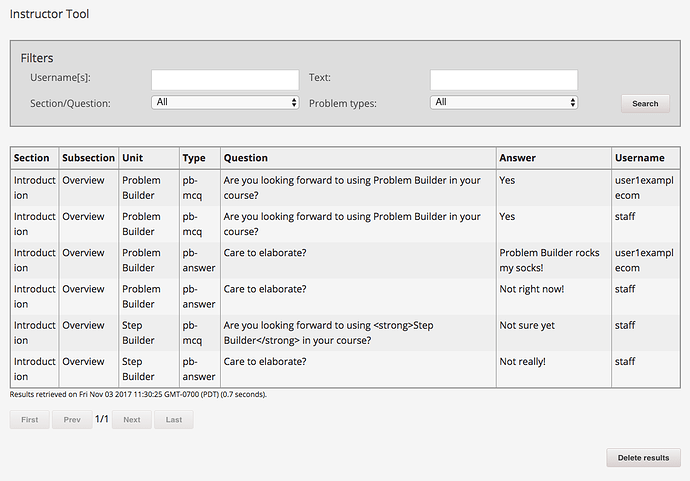

- The Problem Builder XBlock includes a reporting tool that provides most of the desired reporting functionality, but it only works with Problem Builder XBlocks; it doesn’t report on other types of XBlocks. Setup also requires that it be added to a staff-only section of the course, which is a bit unintuitive.

- The Poll XBlock provides built-in reporting and CSV downloading, but again it only covers Poll XBlocks, and it uses yet another interface.

Proposed Solution:

The proposed solution is essentially to take the “Instructor Tool” functionality that exists in Problem Builder, make it a built-in feature by moving it to the Instructor Dashboard, and make it compatible with any type of XBlock (not just Problem Builder).

Screenshot (showing features that exist today):

What this will provide:

- One consistent tool to use whenever an instructor wants to know what their students have answered for any problems in the course.

- Works regardless of how each XBlock stores its data, as XBlocks can use a plugin interface to add their reporting capabilities to this tool.

- View a report for the whole course, just a section, or just a specific problem - flexible scope (already implemented and working)

- Can easily view results online, or download as a CSV report (already implemented and working)

- Can view answers from a specific student, or from all students (already implemented and working)

- Will work for CCX coaches to view their students’ answers in a CCX course (already implemented and working)

Implementation details:

- Installed XBlocks can indicate that they are “reportable”.

- The new Reporting Tool would iterate over all installed XBlocks to determine which ones can be reported on.

- When generating a report, an asynchronous Celery task will iterate over all selected XBlocks+users and call `Block.generate_report_data(course_key, block_key, get_block, user_ids, match_string)`. This must be a static method that can return an arbitrary number of labelled columns to be included in the report (e.g. most will return a “Question” and an “Answer” column).

- The `get_block()` method can be used to return a fully-instantiated version of the XBlock within the LMS runtime and as the user in question; however, this will be inefficient, so it should only be used if necessary. In general, the submissions API or the `XBlockUserStateClient` should be used instead.
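The flow described above might look roughly like the following sketch. It is only an illustration under several assumptions: the class names, the `FAKE_STATE` dictionary, the helpers `is_reportable`, `build_report`, and `report_to_csv`, and the choice to yield `(user_id, columns)` pairs are all hypothetical. Only the `generate_report_data` argument list comes from the proposal. The loop runs synchronously here, whereas the proposal calls for an asynchronous Celery task, and a real block would read from the submissions API or the `XBlockUserStateClient` rather than an in-memory dictionary.

```python
import csv
import io

# Hypothetical stand-in for stored student state, keyed by (block_key, user_id).
# A real block would query the submissions API or XBlockUserStateClient.
FAKE_STATE = {
    ("poll1", 1): "Yes",
    ("poll1", 2): "No",
}


class ReportableBlockSketch:
    """Hypothetical XBlock-like class that opts in to reporting."""

    @staticmethod
    def generate_report_data(course_key, block_key, get_block, user_ids,
                             match_string=None):
        """Yield (user_id, {column label: value}) for each matching answer.

        The return shape is an assumption; the proposal only says the method
        returns an arbitrary number of labelled columns.
        """
        for user_id in user_ids:
            answer = FAKE_STATE.get((block_key, user_id))
            if answer is None:
                continue  # this user has not answered
            if match_string and match_string.lower() not in answer.lower():
                continue  # filtered out by the search string
            yield user_id, {"Question": f"Question in {block_key}",
                            "Answer": answer}


class PlainBlockSketch:
    """Hypothetical block without reporting support."""


def is_reportable(block_class):
    # Assumption: a block is "reportable" if it defines the static method.
    return callable(getattr(block_class, "generate_report_data", None))


def build_report(course_key, blocks, user_ids, match_string=None):
    """Collect report rows from every reportable block (synchronous sketch)."""
    rows = []
    for block_key, block_class in blocks.items():
        if not is_reportable(block_class):
            continue

        def get_block(user_id):
            # Placeholder for the expensive fully-instantiated block lookup.
            raise NotImplementedError("not available in this sketch")

        for user_id, columns in block_class.generate_report_data(
                course_key, block_key, get_block, user_ids, match_string):
            row = {"User": user_id, "Block": block_key}
            row.update(columns)
            rows.append(row)
    return rows


def report_to_csv(rows):
    """Serialize rows to CSV, using the union of all column labels."""
    fieldnames = []
    for row in rows:
        for label in row:
            if label not in fieldnames:
                fieldnames.append(label)
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, restval="")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


if __name__ == "__main__":
    blocks = {"poll1": ReportableBlockSketch, "html1": PlainBlockSketch}
    print(report_to_csv(build_report("course-v1:demo", blocks, [1, 2, 3])))
```

Because each block decides which labelled columns to emit, the CSV writer in this sketch takes the union of labels across all rows and leaves missing cells empty, so blocks with different column sets can share one report.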
